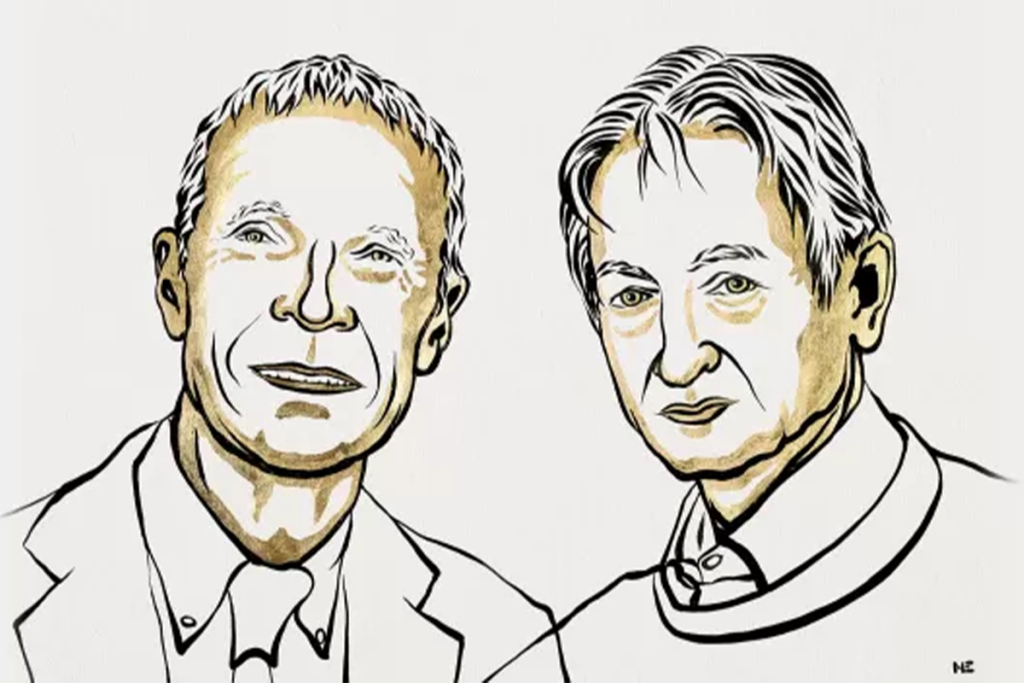

This year’s Nobel Prize in Physics has been awarded to Emeritus Prof John Hopfield and Prof Geoffrey Hinton “for foundational discoveries and inventions that enable machine learning with artificial neural networks”.

Although they never collaborated directly, both were inspired by the interface between biology and physics, and Hinton built upon Hopfield’s work. Together, their contributions laid the foundations of machine learning and artificial intelligence (AI). The idea of linking networks of nodes to capture and process data came from brain biology, whereas the mathematics used to build and run these networks came from molecular and statistical physics.

What are neural networks?

We have previously written about the difference between AI and machine learning, drawing on the analogy of the brain. Neurons are the cells responsible for receiving sensory input from the outside world, for sending motor commands to our muscles, and for transforming and relaying electrical signals at each step in between. They are a fundamental component of the nervous system. The brain contains a vast number of neurons linked by synapses (junctions that allow neurons to communicate with each other). When we learn something, the synaptic connections between particular neurons become stronger; connections that are not used become weaker.

What are artificial neural networks (ANNs)?

ANNs are loosely modelled on the brain’s neural networks. Artificial neurons are arranged in a network, and each one is activated by an input signal that is then passed from one artificial neuron to the next along a network pathway. Along the way, the input data is processed in a user-prescribed or machine-learned way. For example, an ANN may segment, classify, sort or predict. It may also provide decision support and, through machine learning, may even mimic the brain’s ability to learn.
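To make the idea of a signal passing from one artificial neuron to the next more concrete, here is a minimal Python sketch. It assumes the simplest common design, in which each artificial neuron takes a weighted sum of its inputs, adds a bias and passes the result through a sigmoid activation function. The network size, the weight and bias values, and the function names (`neuron`, `forward`) are hypothetical choices for illustration only.

```python
import math


def sigmoid(x: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """One artificial neuron: a weighted sum of its inputs, then an activation function."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(total)


def forward(inputs: list[float], layers) -> list[float]:
    """Pass a signal through successive layers; each layer's outputs become the next layer's inputs."""
    signal = inputs
    for layer in layers:
        signal = [neuron(signal, weights, bias) for weights, bias in layer]
    return signal


# Hypothetical network: 2 inputs -> hidden layer of 2 neurons -> 1 output neuron.
# Each neuron is described by (connection weights, bias).
hidden = [([0.5, -0.4], 0.1), ([0.3, 0.8], -0.2)]
output = [([1.2, -0.7], 0.05)]

result = forward([1.0, 0.0], [hidden, output])
print(result)  # a one-element list holding a value between 0 and 1
```

The weights here are fixed by hand; in a trained network they would be learned from data, which is what the next step of the process is about.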

Neurons in an artificial neural network are “activated” by input signals. These activations cascade from one artificial neuron to the next in ways that transform and process the input information. As a result, the ANN can carry out computational tasks such as classification, prediction and decision-making. Just as the brain strengthens some connections when we learn, “training” a network strengthens some connections between artificial neurons and weakens others. Through this process, the “machine” captures data, remembers it and learns from it.
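The strengthening and weakening of connections during training can be illustrated with one of the oldest learning rules, Rosenblatt’s perceptron rule, used here purely as a stand-in: it is not the method Hopfield or Hinton used, and modern networks are usually trained with gradient descent and backpropagation instead. In this sketch a single artificial neuron learns the logical AND of two inputs; the training data, learning rate and function names are hypothetical.

```python
def step(total: float) -> int:
    """Threshold activation: fire (1) if the weighted sum is positive, otherwise stay silent (0)."""
    return 1 if total > 0 else 0


def predict(inputs, weights, bias) -> int:
    """Output of a single artificial neuron for one set of inputs."""
    return step(sum(x * w for x, w in zip(inputs, weights)) + bias)


def train_perceptron(examples, epochs=20, learning_rate=1.0):
    """Perceptron learning rule: adjust the connection weights after every example."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in examples:
            error = target - predict(inputs, weights, bias)  # +1, 0 or -1
            # error = +1: output too low, so strengthen connections from active inputs.
            # error = -1: output too high, so weaken them. error = 0: no change.
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Hypothetical training data: the logical AND of two binary inputs.
AND_EXAMPLES = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]

weights, bias = train_perceptron(AND_EXAMPLES)
print(weights, bias)
print([predict(inputs, weights, bias) for inputs, _ in AND_EXAMPLES])  # [0, 0, 0, 1]
```

The weights start at zero and are nudged up or down after each mistake until the neuron answers every example correctly; at that point no further changes are made.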

John Hopfield’s “Hopfield networks” were among the first ANNs of this kind. These networks could memorise data (e.g. an image) and later recall it: when given an incomplete or distorted version, the network could retrieve the stored image most similar to it by association. Geoff Hinton later extended Hopfield’s work to develop Boltzmann machines. These demonstrated machine learning, whereby a computer model could be trained, by being given examples, to recognise the characteristic features of a type of data. Combined, this work showed that an ANN could learn.
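The memorise-and-recall behaviour of a Hopfield network can be sketched in a few lines of Python. This follows the common textbook formulation: units take values +1 or −1, patterns are stored with a Hebbian rule (the connection between two units is strengthened when they agree in a stored pattern and weakened when they disagree), and recall updates one unit at a time until the network settles. The two 8-unit “images”, the function names and the sweep limit are hypothetical; real networks are larger and can only reliably store a limited number of patterns relative to their size. Boltzmann machines are not shown here.

```python
def store(patterns):
    """Hebbian storage: w[i][j] grows when units i and j agree in a pattern, shrinks when they disagree."""
    n = len(patterns[0])
    weights = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    weights[i][j] += p[i] * p[j]
    return weights


def recall(weights, state, max_sweeps=10):
    """Set each unit, one at a time, to the sign of its weighted input until nothing changes."""
    state = list(state)
    n = len(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(n):
            field = sum(weights[i][j] * state[j] for j in range(n))
            new_value = 1 if field >= 0 else -1
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:  # settled into a stable state (ideally a stored memory)
            break
    return state


# Two hypothetical 8-"pixel" images, encoded as +1 (black) and -1 (white).
PATTERN_A = [1, 1, 1, 1, -1, -1, -1, -1]
PATTERN_B = [1, -1, 1, -1, 1, -1, 1, -1]
memory_weights = store([PATTERN_A, PATTERN_B])

noisy_a = [1, 1, 1, -1, -1, -1, -1, -1]  # PATTERN_A with one pixel flipped
print(recall(memory_weights, noisy_a) == PATTERN_A)  # True
```

Given a corrupted version of the first image, the network settles back onto the stored original, which is the “identify a similar image by association” behaviour described above.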

How does this relate to HERA?

HERA is leading a number of projects involving AI and machine learning, all of which build on the fundamental work of Hopfield, Hinton and many others. These include our Construction 4.0 project, as well as a range of projects we are undertaking in collaboration with the Australian Composites Manufacturing CRC. We are building this capability and aim to be a national leader in AI related to fabrication and construction. We are conscious of how fast this research is moving, and of Hinton’s ongoing concerns about the rate of change in this field and the need for greater AI regulation. We proceed cautiously, with our eyes open to both the benefits and the risks of AI in our research.

Disclaimer: this article was written by a person and not a bot!